Leading Agentic AI Platform for Autonomous Operations

Blogs & Articles

Implementing Agentic AI: A Technical Overview of Architecture and Frameworks

Fabrix.ai Successfully Concludes First of Its Kind Agentic AI Demo Day and Joins AGNTCY Collective

Fabrix.ai Demo Day Showcases Agentic Platform and AGNTCY Collective Ecosystem Alliance

Some of the open source standards used with AI agents or agentic frameworks

Media Coverage

Fabrix.ai: Bringing Agentic AI to IT Operations and Observability

By Bob Laliberte

AgentOps: Operationalizing Agentic AI

Shailesh Manjrekar

Fabrix.ai brings fresh take on agentic AI operational intelligence

The Evolution From AIOps To AgentOps: Agentic Operational Intelligence Platform

Looking At The Crystal Ball: 2025 Predictions For Agentic AI

Agentic AI Makes Autonomous Enterprises A Reality

GenAI For AIOps Catapults Digital Transformation In Healthcare

Videos

Innovation never stops at Fabrix.ai!

Agentic AI Operational Platform - Product Demo | Shailesh Manjrekar

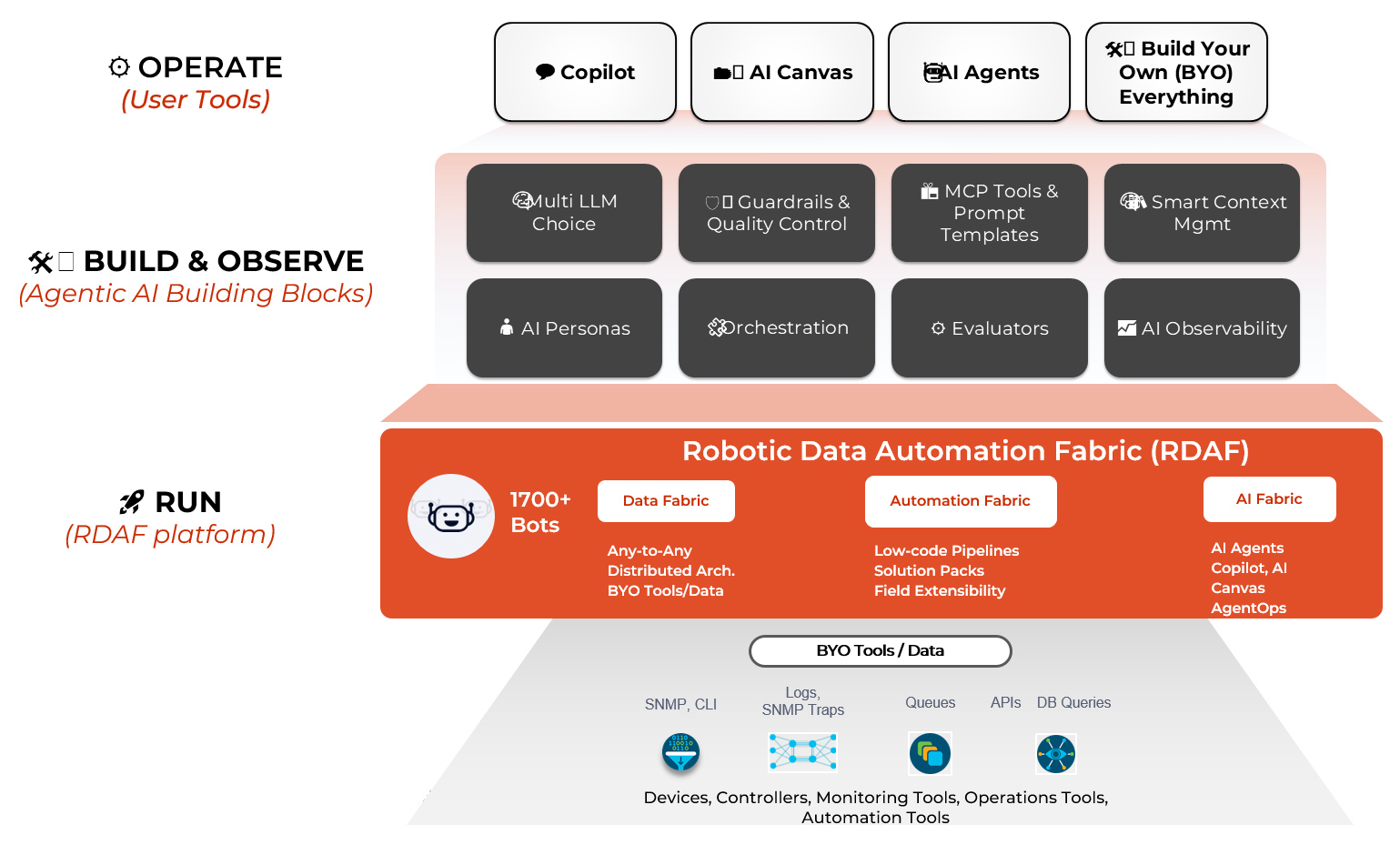

Enterprise Grade AI Platform

From build to observe - guardrailed, auditable agents engineered for enterprise scale.

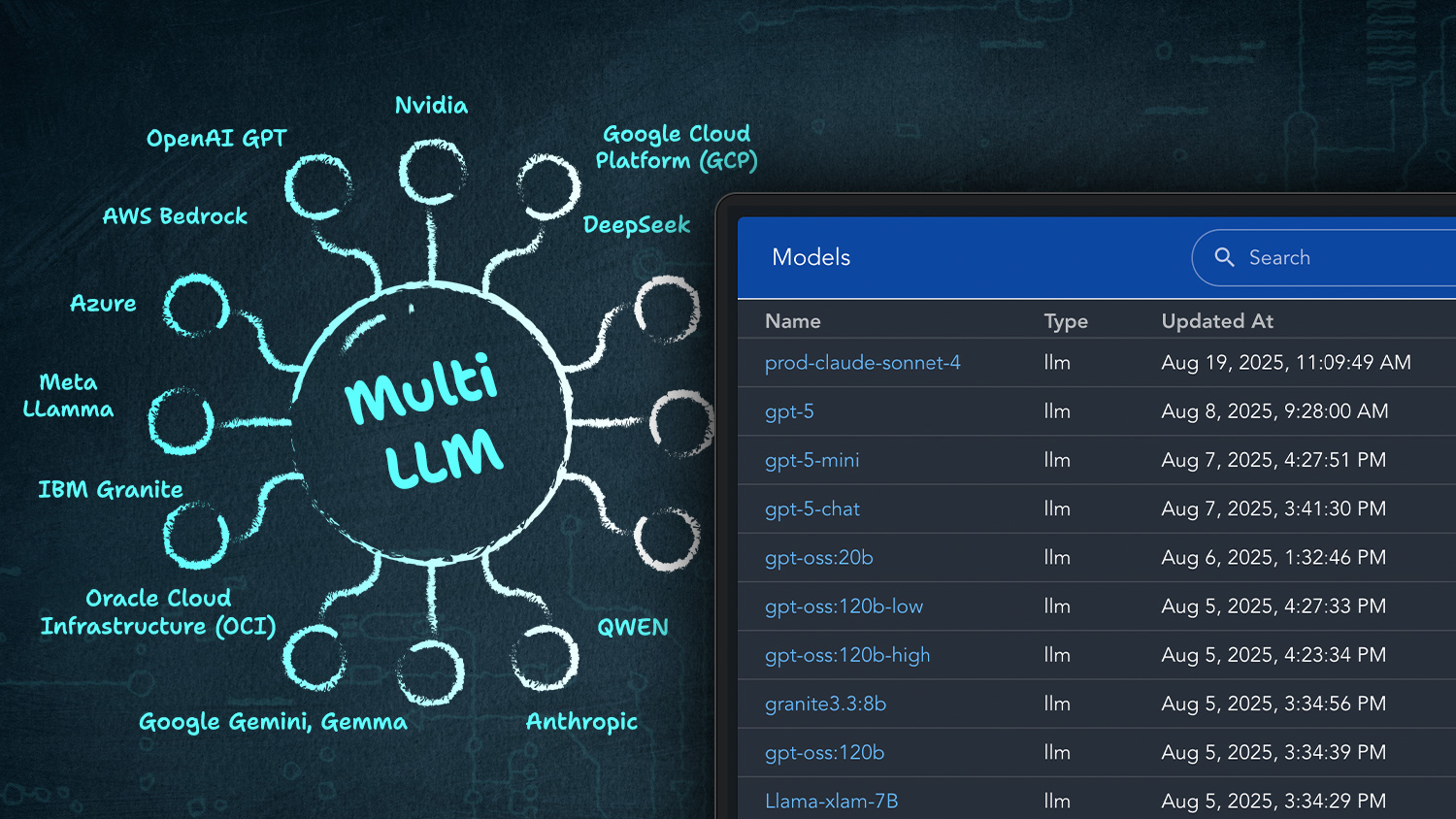

Supports leading LLMs, on-prem or in the cloud, with seamless integration. NVIDIA-ready.

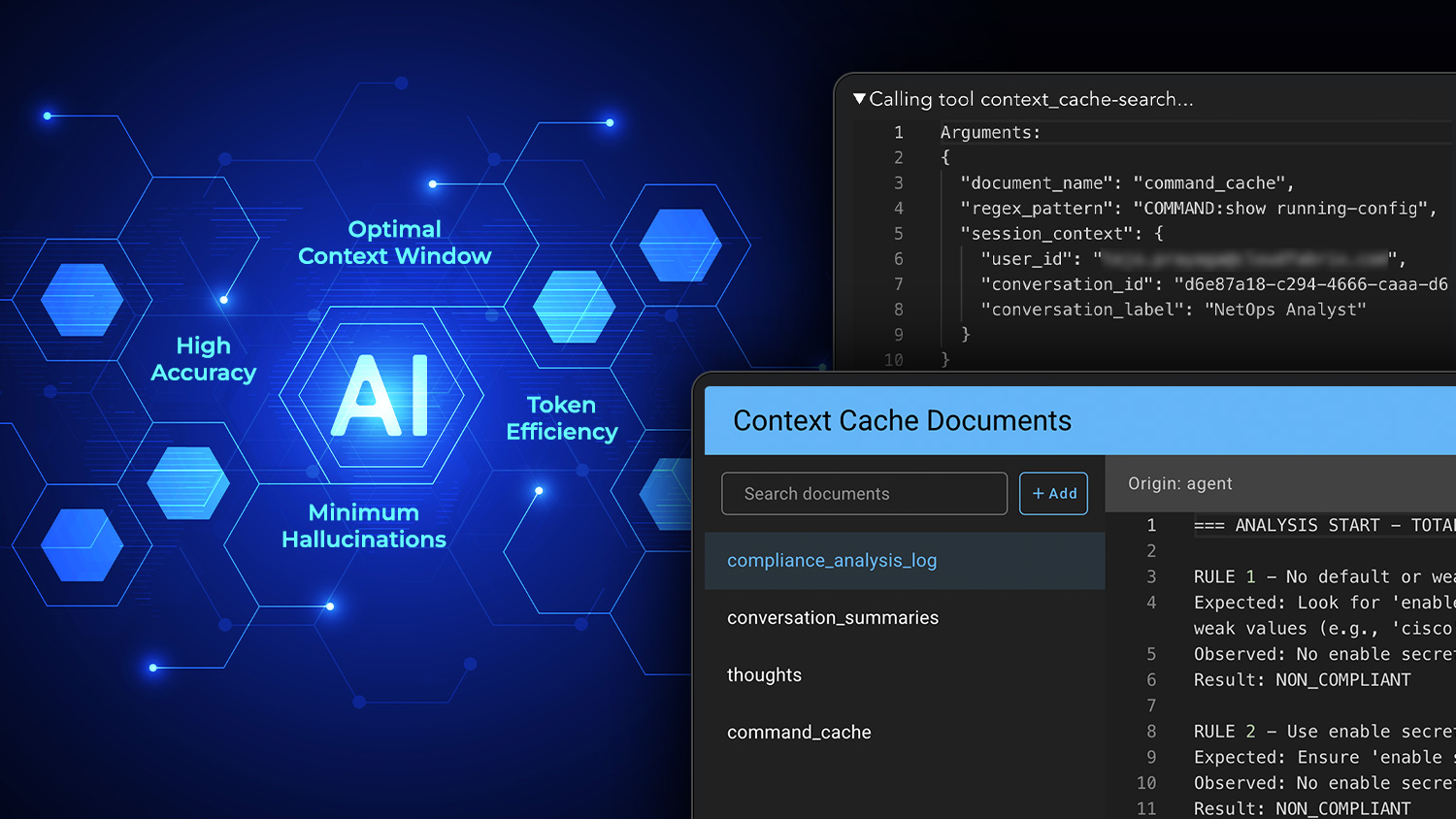

Provides context caching to reduce token usage and lets LLMs work with very large datasets.

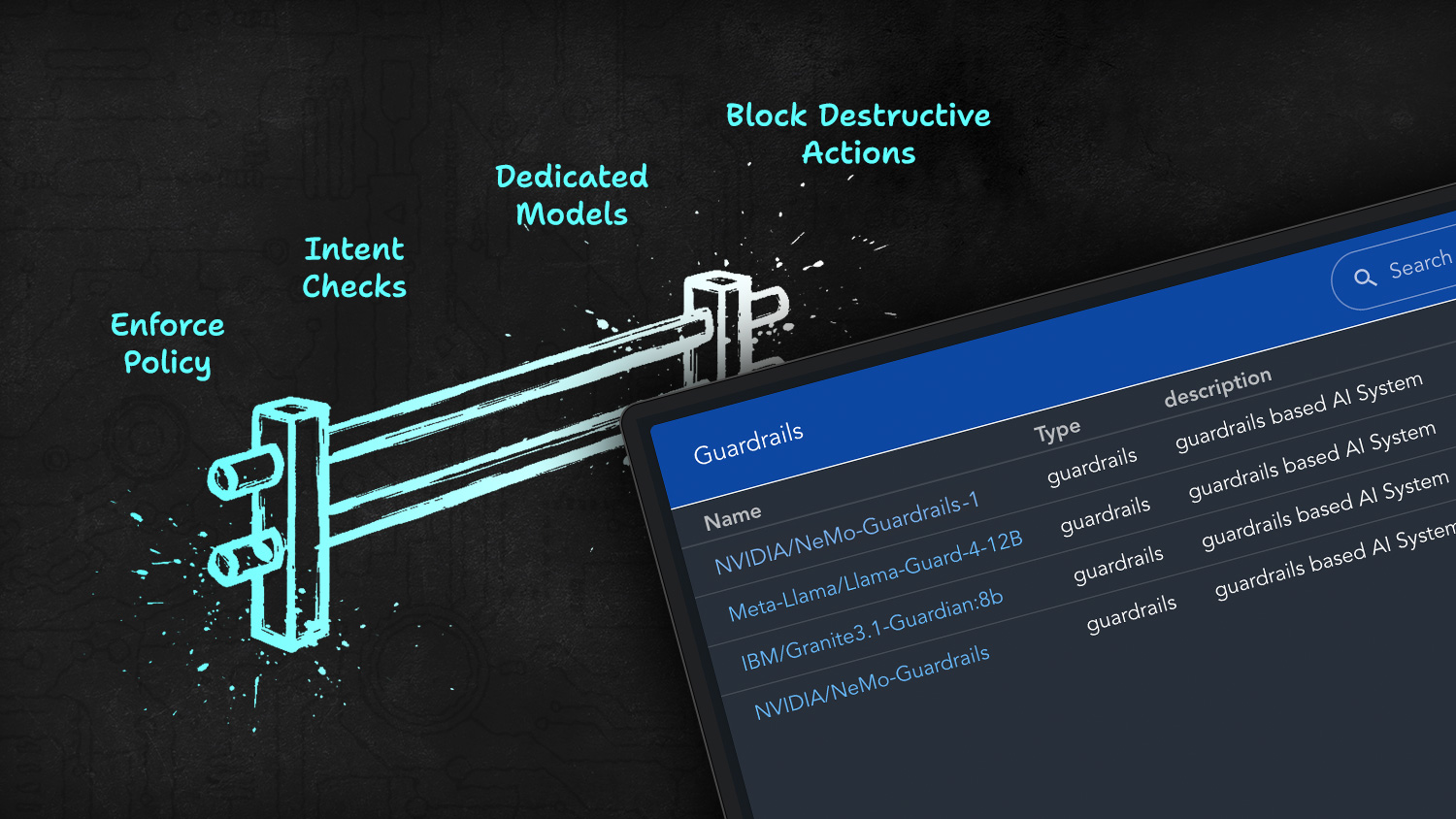

Enforce safety, policy, and intent checks on every run, blocking non-compliant prompts and destructive actions, via seamless integrations with dedicated models and providers.

Per-persona data masking for Agentic AI. Sensitive fields are masked before prompts reach the LLM and optionally unmasked client-side when rendering results. The whole path is auditable end to end.
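The masking flow described above can be illustrated with a minimal Python sketch. It is not Fabrix.ai's implementation: the regex patterns, the persona names, and the `mask`/`unmask` helpers are all assumptions made for illustration. Sensitive values are swapped for placeholders before the prompt is sent, and the reverse map stays on the client so results can be unmasked at render time.

```python
import re

# Hypothetical patterns: which fields count as sensitive is an assumption.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "IPV4": re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"),
}

# Hypothetical per-persona policy: which kinds of field each persona has masked.
PERSONA_MASKS = {
    "noc_operator": ["EMAIL", "IPV4"],
    "security_analyst": ["EMAIL"],
}

def mask(text, kinds):
    """Replace sensitive values with placeholders; return masked text and the reverse map."""
    mapping = {}  # placeholder -> original value, kept on the client side
    for kind in kinds:
        def substitute(match, kind=kind):
            value = match.group(0)
            # Reuse the same placeholder when a value repeats.
            for token, original in mapping.items():
                if original == value:
                    return token
            token = f"<{kind}_{len(mapping) + 1}>"
            mapping[token] = value
            return token
        text = PATTERNS[kind].sub(substitute, text)
    return text, mapping

def unmask(text, mapping):
    """Restore original values in a model response before rendering."""
    for token, original in mapping.items():
        text = text.replace(token, original)
    return text

prompt = "Ping loss from 10.0.0.7 reported by alice@example.com; 10.0.0.7 is a core router."
masked, mapping = mask(prompt, PERSONA_MASKS["noc_operator"])
print(masked)
```

Only `masked` would be sent to the model; `mapping` never leaves the client, which is what makes optional client-side unmasking possible.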

Give LLMs access to your data and tools through the Model Context Protocol (MCP). Includes a built-in MCP server. Add new MCP tools dynamically, with no code.

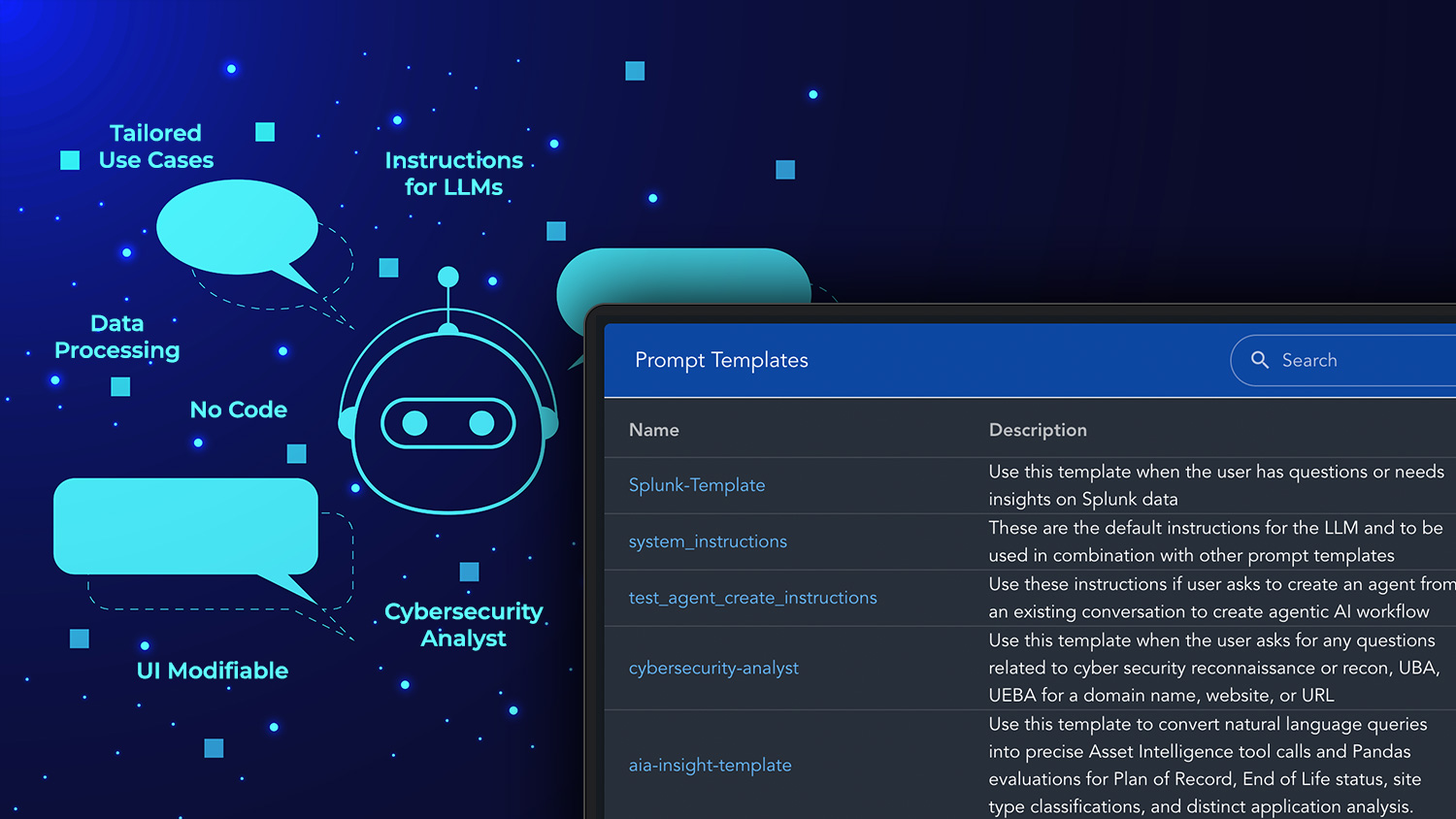

Prompt templates: sets of instructions that tell LLMs how to process data and shape results for your use case. Editable from the UI, no code required.

RBAC‑like scoping presents only persona‑relevant MCP tools and data to the LLM, improving accuracy and governance.
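As a rough illustration of persona scoping, the sketch below filters a tool catalog down to what a given persona is allowed to see before the list is offered to a model. The tool names, persona names, and the `tools_for` helper are hypothetical and are not taken from the Fabrix.ai product.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Tool:
    name: str
    description: str
    destructive: bool = False

# Hypothetical tool catalog.
CATALOG = [
    Tool("query_alerts", "Search active alerts"),
    Tool("get_topology", "Fetch network topology"),
    Tool("restart_service", "Restart a service", destructive=True),
]

# Hypothetical persona-to-tool grants.
PERSONA_TOOLS = {
    "noc_analyst": {"query_alerts", "get_topology"},
    "sre_oncall": {"query_alerts", "get_topology", "restart_service"},
}

def tools_for(persona):
    """Return only the tools granted to this persona; unknown personas get none."""
    allowed = PERSONA_TOOLS.get(persona, set())
    return [tool for tool in CATALOG if tool.name in allowed]

print([tool.name for tool in tools_for("noc_analyst")])
```

Defaulting unknown personas to an empty tool list is a deliberate deny-by-default choice in this sketch.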

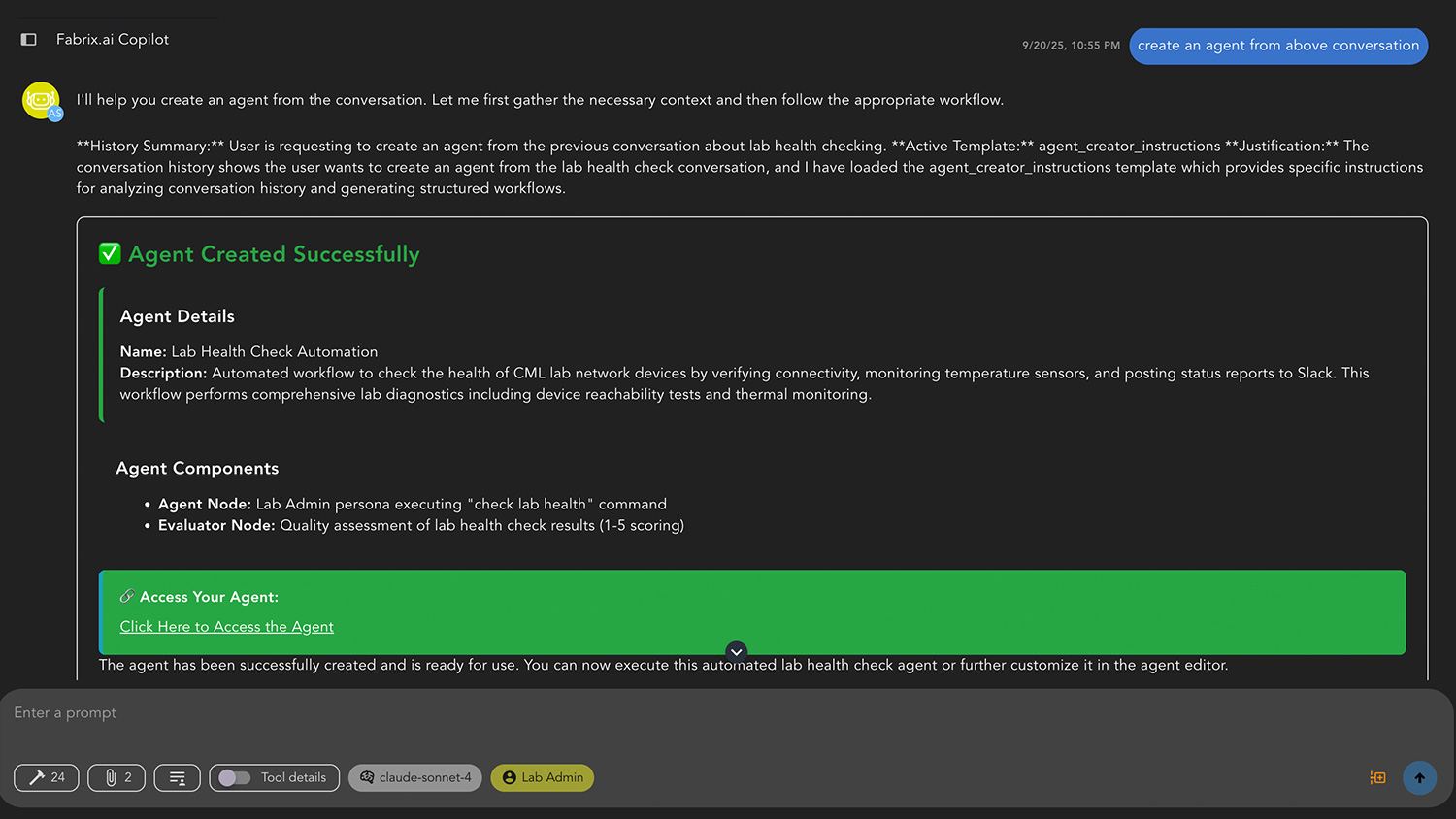

From prompt to production agent: prototype in Copilot, iterate, then simply ask it to create an agent, with persona, tools, prompts, and workflow packaged automatically.

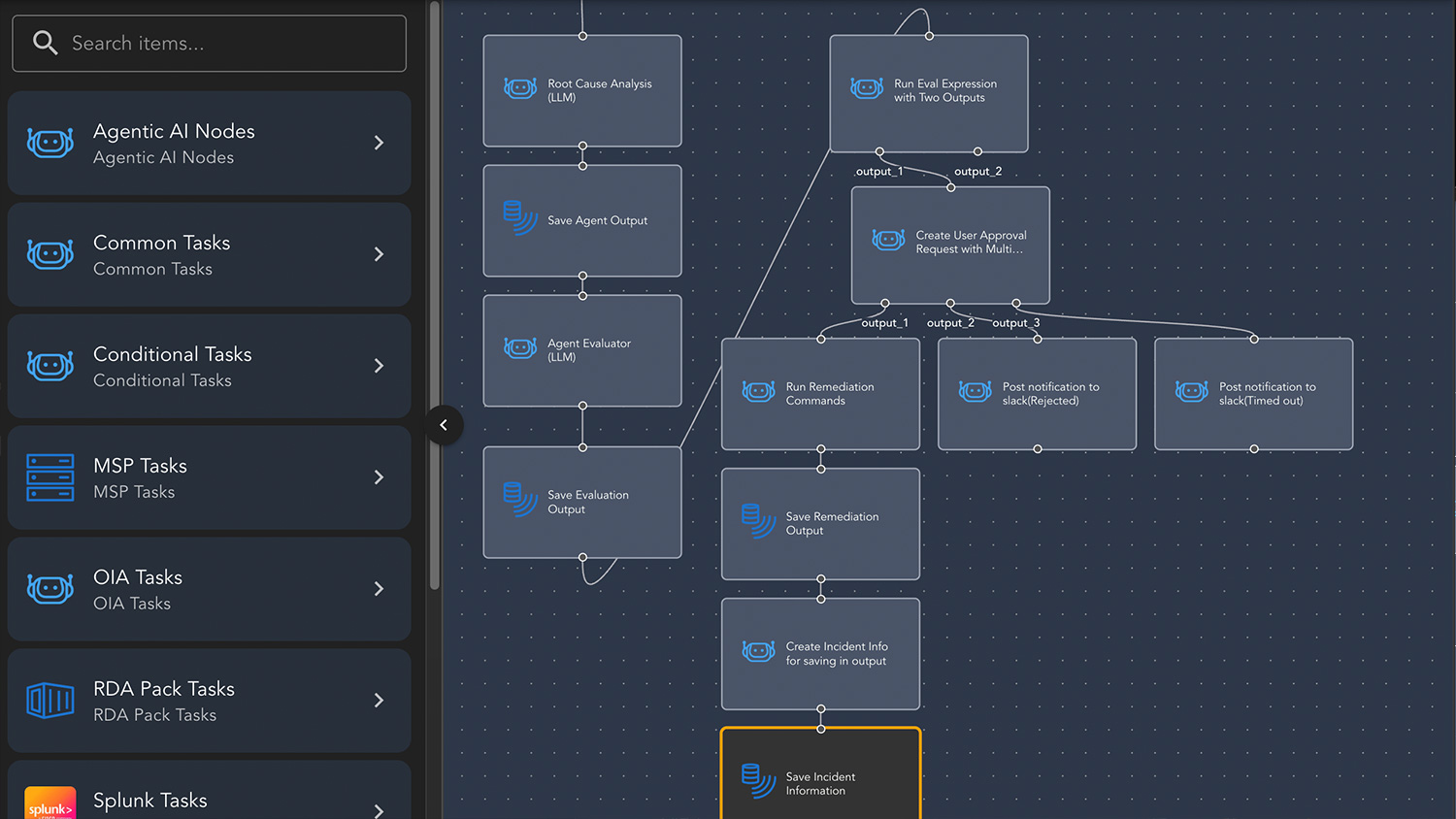

No-code, drag-and-drop approach to easily build and operate agentic workflows. Built-in task library.

Build, Operate & Observe

AI agents purpose-built for IT Operations - end to end, in one platform.

- Multi-LLM Choice: on-prem or cloud. NVIDIA-ready

- Guardrails & Data Privacy: block unsafe AI interactions with Llama Guard, NVIDIA NeMo Guardrails, IBM Granite Guardian, and others; PII redaction

- MCP Tools — built-in MCP server; adapters to data/actions. Easily add new tools with no-code

- Prompt Templates — UI-editable LLM instructions to process data and produce desired outcomes

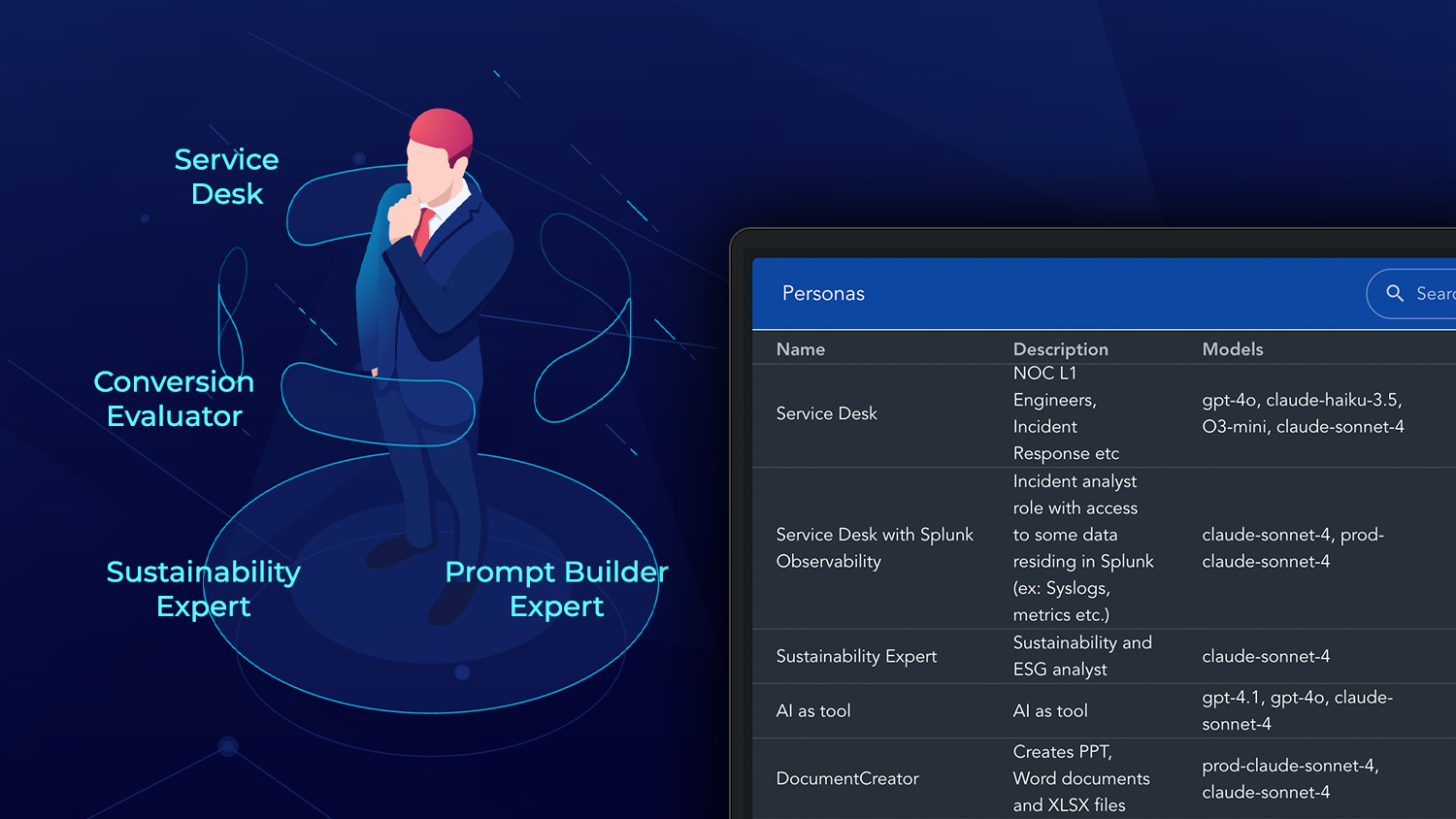

- AI Personas — RBAC for models, tools, prompts

- Smart Context Mgmt — caching/retrieval for large datasets; token & latency savings

- Orchestration — no-code workflows with conditions, approvals, retries, rollback

- AI Observability: traces, tokens, cost, quality, accuracy, and coherence metrics for every run

- RDAF Platform: Proven microservices Operational Intelligence fabric unifying Data, Automation, and AI
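To make the retry and rollback behavior named in the Orchestration item concrete, here is a minimal sketch in plain Python. It is an assumption-laden illustration, not the platform's workflow engine: `run_with_retries`, `flaky`, and the retry count are invented for the example.

```python
def run_with_retries(action, rollback, retries=2):
    """Call `action` up to retries + 1 times; if every attempt fails, roll back and re-raise."""
    last_error = None
    for _ in range(retries + 1):
        try:
            return action()
        except Exception as exc:
            last_error = exc  # remember the failure and try again
    rollback()  # all attempts failed: undo any partial changes
    raise last_error

# Hypothetical step that fails twice with a transient error, then succeeds.
calls = {"n": 0}

def flaky():
    calls["n"] += 1
    if calls["n"] < 3:
        raise RuntimeError("transient failure")
    return "ok"

result = run_with_retries(flaky, rollback=lambda: None)
print(result, "after", calls["n"], "attempts")
```

A real workflow would typically add backoff between attempts and distinguish retryable from fatal errors; both are left out here to keep the control flow visible.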

AgentOps - Operationalizing the Agentic Platform

Build • Operate • Observe — only on Fabrix.ai

AIOps to Agentic AIOps

Capability

Traditional AIOps

Agentic AIOps (Next-Gen)

Why Fabrix.ai Agentic Platform?

Challenge

Fabrix.ai Solution

AI Personas & Use Cases

The platform ships with workflows for operational personas and can be adapted to any persona or agentic workflow.

Agents Built for Every Ops Use Case

Fabrix.ai

Gen AI Copilot for Observability & AIOps

From Low Code to No Code!

Fabrix.ai: Bringing Agentic AI to IT Operations and Observability

Fabrix.ai aims to close that gap with its Agentic AI–driven operational intelligence platform. In a recent discussion, Shailesh Manjrekar, Chief AI and Marketing Officer at Fabrix.ai, outlined how the company’s approach, built on data fabrics, intelligent agents, and low-code operations, seeks to transform IT operations by combining reasoning and action with enterprise-grade guardrails.

Quick Start

How to Get Started with Fabrix AI Agents

Select your preferred AI deployment model:

- Cloud-based LLMs: Use OpenAI, Anthropic, or other public APIs. Ideal for agility and ease of access.

- On-premise / Self-hosted LLMs: Deploy open-source models (e.g., LLaMA, Mistral) within your secure infrastructure. Best for data sovereignty, compliance, or low-latency edge scenarios.

Fabrix.ai also supports hybrid and multi-LLM architectures.

Need support with private LLM hosting or advanced agent configuration?

Fabrix.ai Professional Services offers:

- Infrastructure setup for private LLMs

- Agent deployment and orchestration guidance

- Integration with observability and NOC environments

Accelerate deployment using pre-built agents for common telecom and enterprise use cases:

- RCA Agent: Performs automated root cause analysis across network and IT domains

- Anomaly Detection Agent: Identifies behavioral anomalies in KPIs, metrics, or events using AI/ML

- Service Migration Agent: Assists in migrating services from legacy to modern platforms (e.g., MPLS to SD-WAN, 3G to 5G) with dependency mapping, risk detection, and rollback planning

Custom agents allow you to embed Fabrix.ai intelligence into your unique workflows.

Steps to Build:

- Choose a template (e.g., RCA, Migration, Compliance, Detection)

- Define the goal or operational intent of the agent

- Use the prompt builder to shape LLM responses and behavior

- Attach relevant data sources, telemetry, and thresholds

- Configure constraints, escalation logic, or feedback loops
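The build steps above map naturally onto a small declarative definition. The sketch below is one possible shape for such a definition, written as a Python dataclass; the field names, the `AgentSpec` class, and the validation rules are assumptions for illustration and do not reflect the actual Fabrix.ai agent schema. Only the template names come from the list above.

```python
from dataclasses import dataclass, field

# Template names taken from the steps above.
TEMPLATES = {"RCA", "Migration", "Compliance", "Detection"}

@dataclass
class AgentSpec:
    template: str                      # step 1: starting template
    goal: str                          # step 2: operational intent
    prompt: str                        # step 3: instructions shaping LLM behavior
    data_sources: list = field(default_factory=list)   # step 4: attached data and telemetry
    thresholds: dict = field(default_factory=dict)     # step 4: numeric thresholds
    requires_approval: bool = True     # step 5: constraint on sensitive actions

    def problems(self):
        """Return a list of human-readable issues; empty means the spec looks complete."""
        issues = []
        if self.template not in TEMPLATES:
            issues.append(f"unknown template: {self.template}")
        if not self.goal.strip():
            issues.append("goal is empty")
        if not self.data_sources:
            issues.append("no data sources attached")
        return issues

spec = AgentSpec(
    template="RCA",
    goal="Find the root cause of packet loss on core links",
    prompt="Correlate alerts with topology changes and summarize the likely cause.",
    data_sources=["syslog", "snmp_metrics"],   # hypothetical source names
    thresholds={"packet_loss_pct": 2.0},       # hypothetical threshold
)
print(spec.problems())
```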

Fabrix.ai includes a powerful MCP (Model Context Protocol) server that exposes:

- Data pipelines and enriched telemetry

- Automation workflows and runbooks

This allows external agents, tools, or orchestration layers to seamlessly access and interact with Fabrix.ai’s real-time intelligence. Additionally, Fabrix.ai agents can:

- Discover and collaborate with other Fabrix.ai or third-party agents

- Participate in multi-agent workflows across network, app, and service domains

Together, these capabilities enable scalable, decentralized decision-making across the enterprise or service provider ecosystem.
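For readers unfamiliar with MCP, an external client talks to an MCP server with JSON-RPC 2.0 messages such as `tools/list` and `tools/call`. The sketch below only builds those request payloads; it sends nothing over the network. The tool name `query_telemetry` and its arguments are hypothetical and are not documented Fabrix.ai tools.

```python
import itertools
import json

_ids = itertools.count(1)  # JSON-RPC request ids

def rpc(method, params=None):
    """Build a JSON-RPC 2.0 request of the kind MCP clients send."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message

# Ask the server which tools it exposes.
list_request = rpc("tools/list")

# Invoke one tool by name with arguments (hypothetical tool and arguments).
call_request = rpc(
    "tools/call",
    {"name": "query_telemetry", "arguments": {"site": "nyc-01", "window_minutes": 15}},
)

print(json.dumps(list_request))
print(json.dumps(call_request, indent=2))
```

In practice a client library would handle transport, initialization, and response parsing; the payloads are shown raw here to make the protocol shape visible.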

Launch your agent in a target environment (NOC, service domain, pilot region)

- Test with synthetic or historical data

- Use the explainability layer to observe how decisions are made

- Iterate on prompts, thresholds, or workflows based on real-time performance

Agents can run autonomously or with approval workflows in place for sensitive actions.
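The difference between autonomous execution and approval-gated execution can be sketched as a small gate in front of each action. Everything here is illustrative: the `SENSITIVE` set, the action names, and the `execute` helper are assumptions, not the platform's API.

```python
# Hypothetical set of actions that must not run without sign-off.
SENSITIVE = {"restart_service", "rollback_change"}

def execute(action, handler, approver=None):
    """Run `handler` for `action`; sensitive actions need an approver that returns True."""
    if action in SENSITIVE:
        if approver is None or not approver(action):
            return {"action": action, "status": "blocked"}
    return {"action": action, "status": "done", "result": handler()}

# Non-sensitive action: runs autonomously.
print(execute("collect_logs", lambda: "42 lines"))

# Sensitive action without approval: blocked.
print(execute("restart_service", lambda: "restarted"))

# Sensitive action with an approver callback (stand-in for a human or policy check).
print(execute("restart_service", lambda: "restarted", approver=lambda action: True))
```

Blocking when no approver is supplied keeps the default safe: a sensitive action never runs just because the approval hook was left unconfigured.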