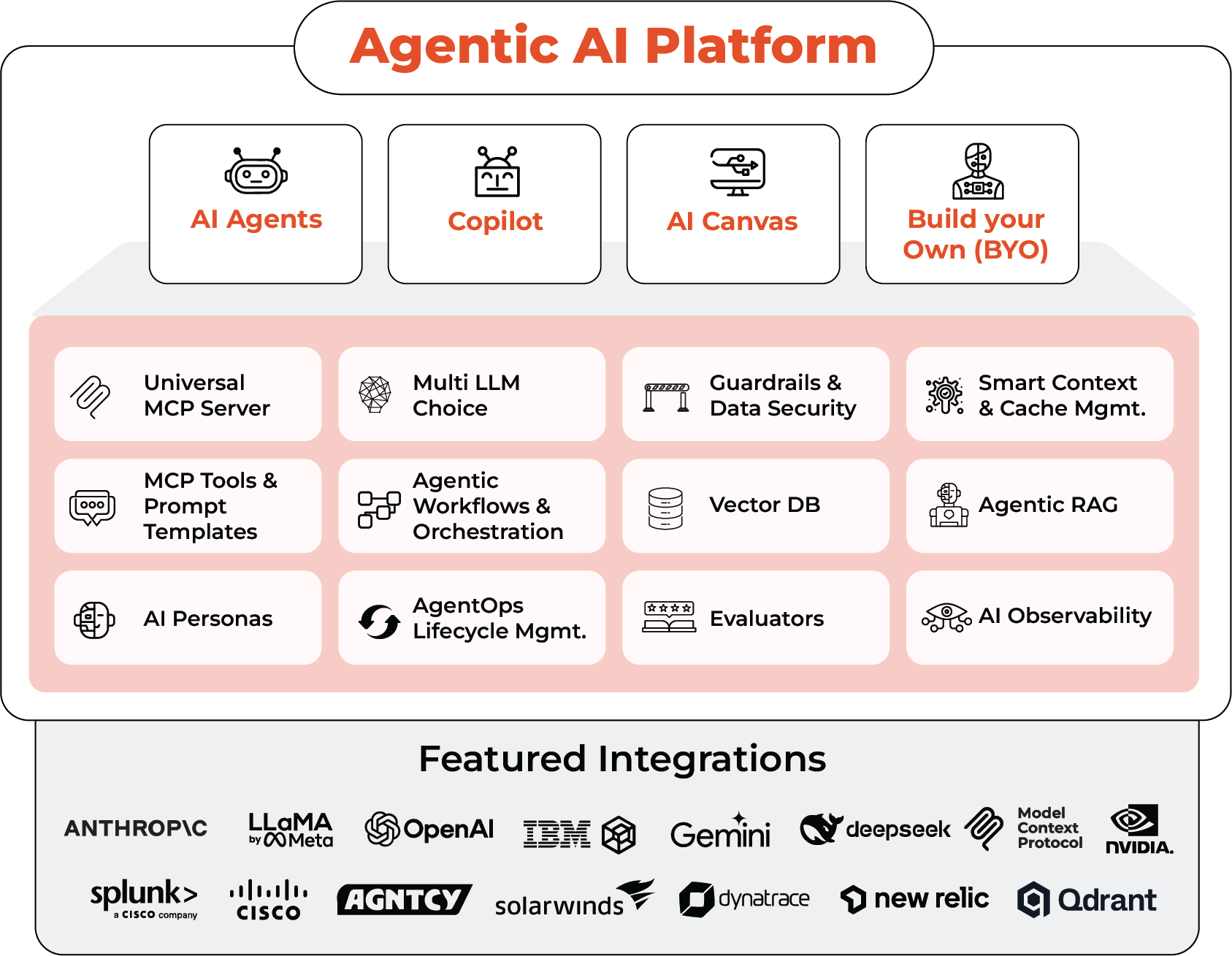

Agentic AI Platform

Operationalize AI Agents at Scale for Modern Autonomous Operations

Operationalize AI Agents at Scale with Gen AI, Copilot, Agent Lifecycle Manager, Orchestrator, Guardrails, RAG, LLM integrations and more.

Enterprise Grade Capabilities

From build to observe: guardrailed, auditable agents engineered for enterprise scale.

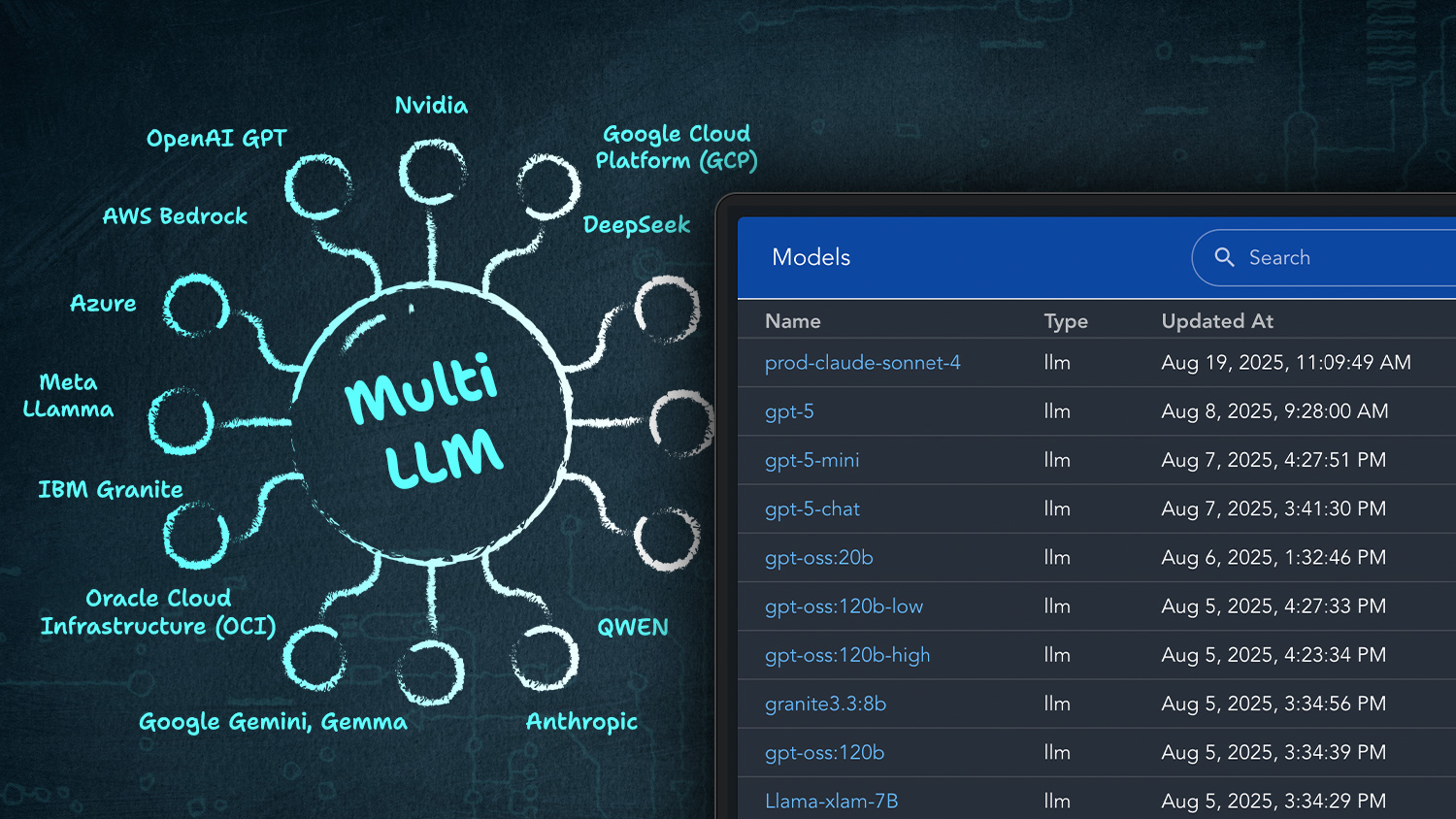

Supports featured LLMs. On-prem or cloud. Seamless integration. NVIDIA-ready.

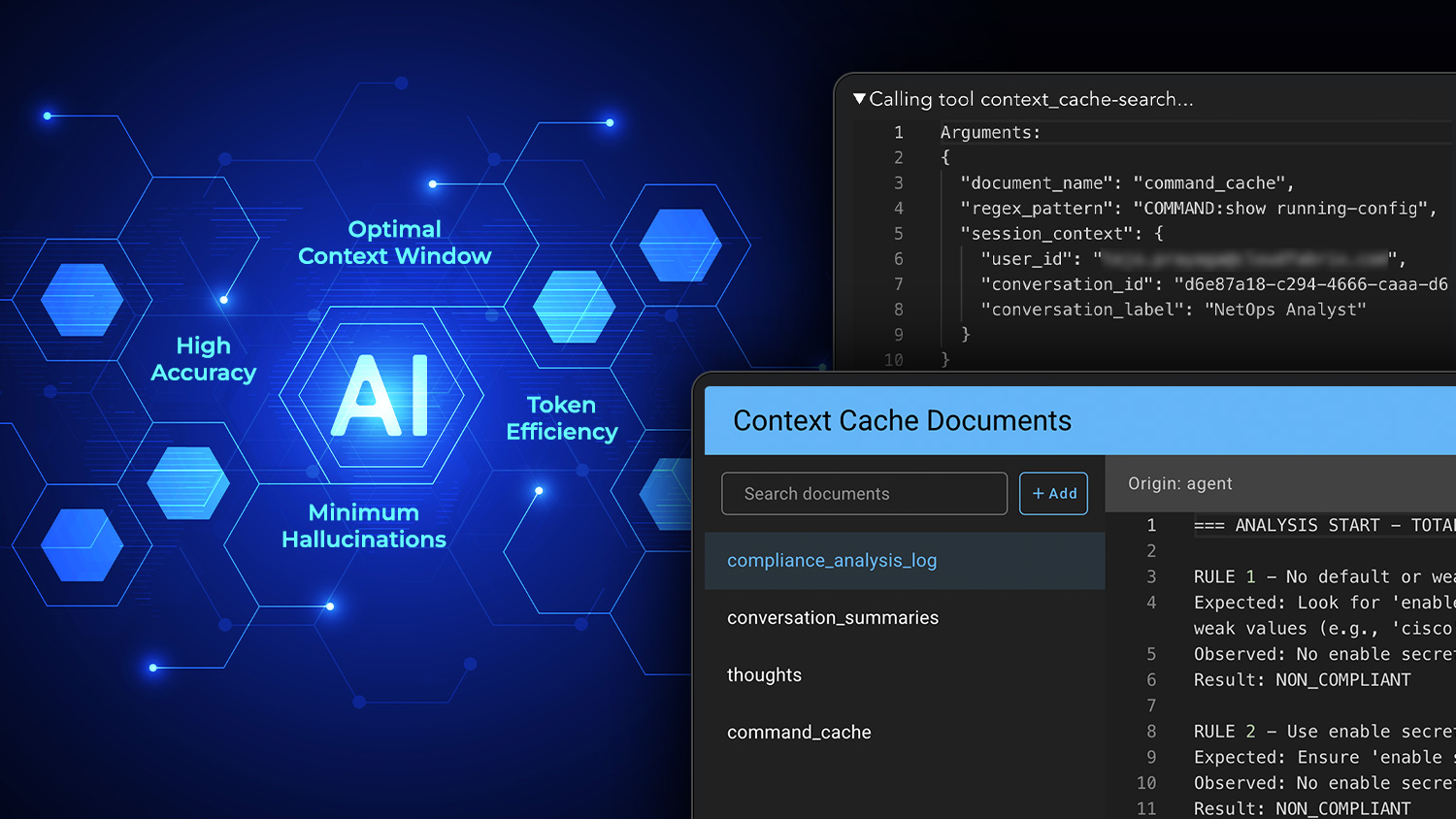

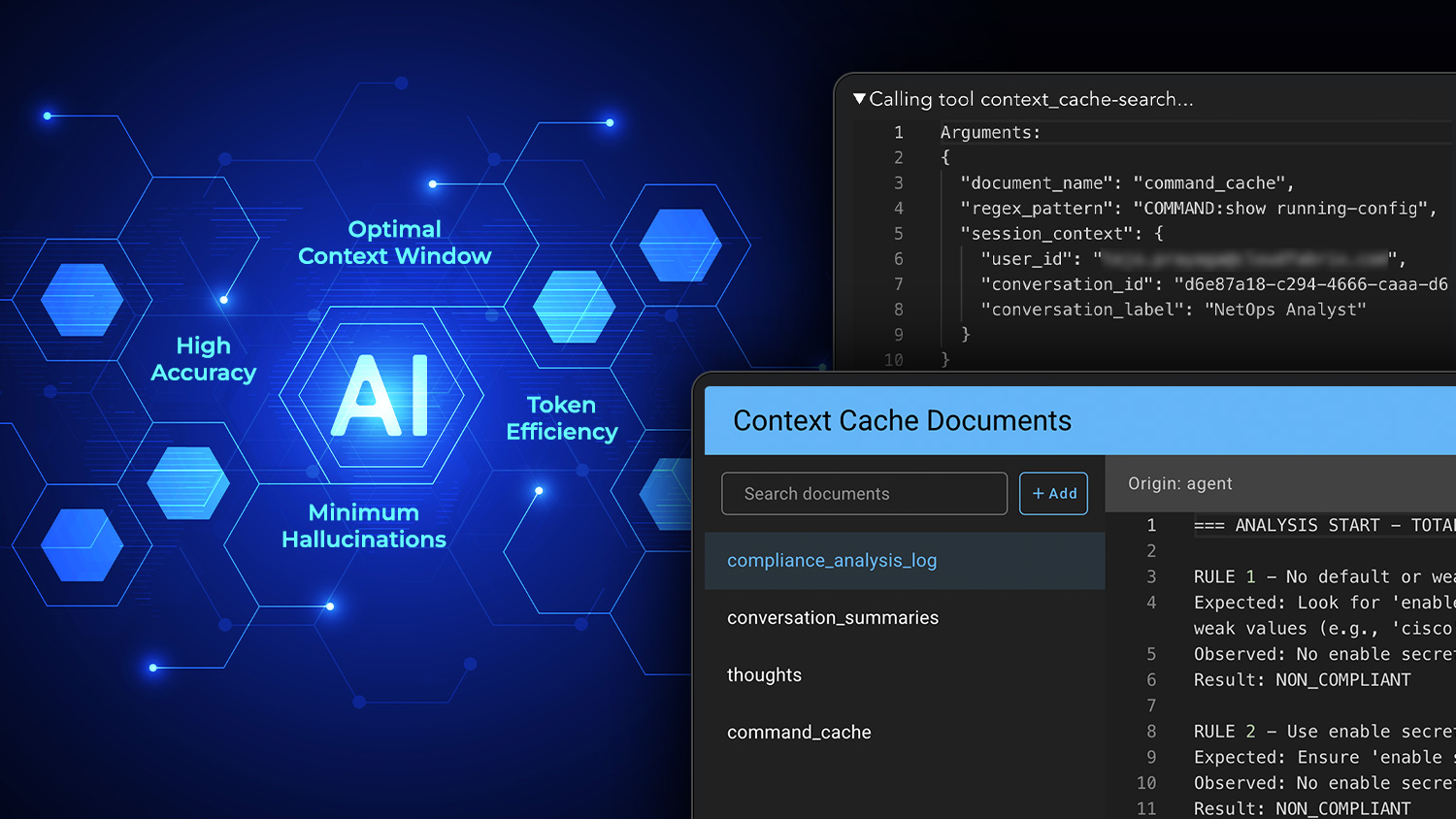

Provides context caching to optimize token usage and allow LLMs to work with very large datasets.

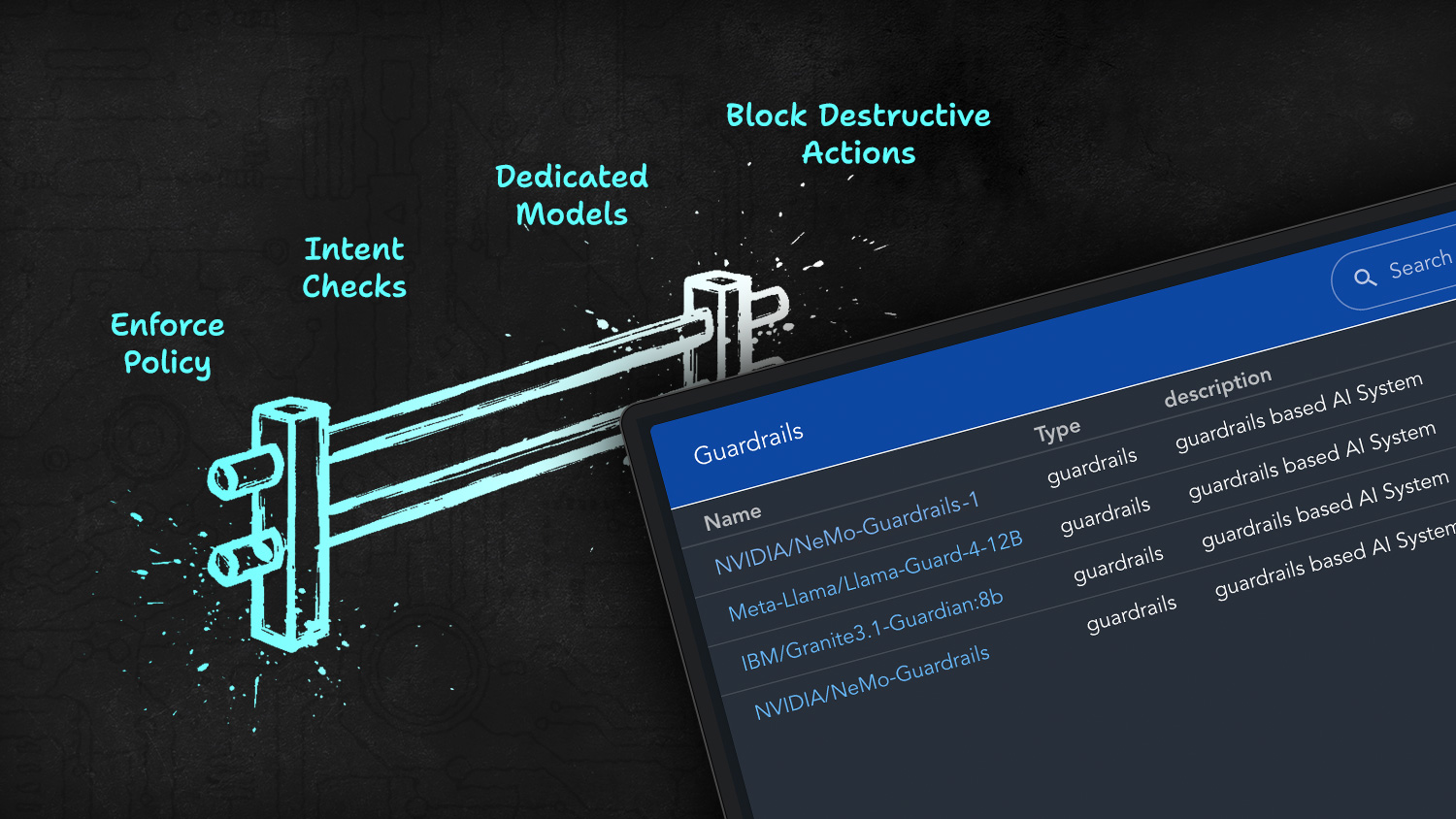

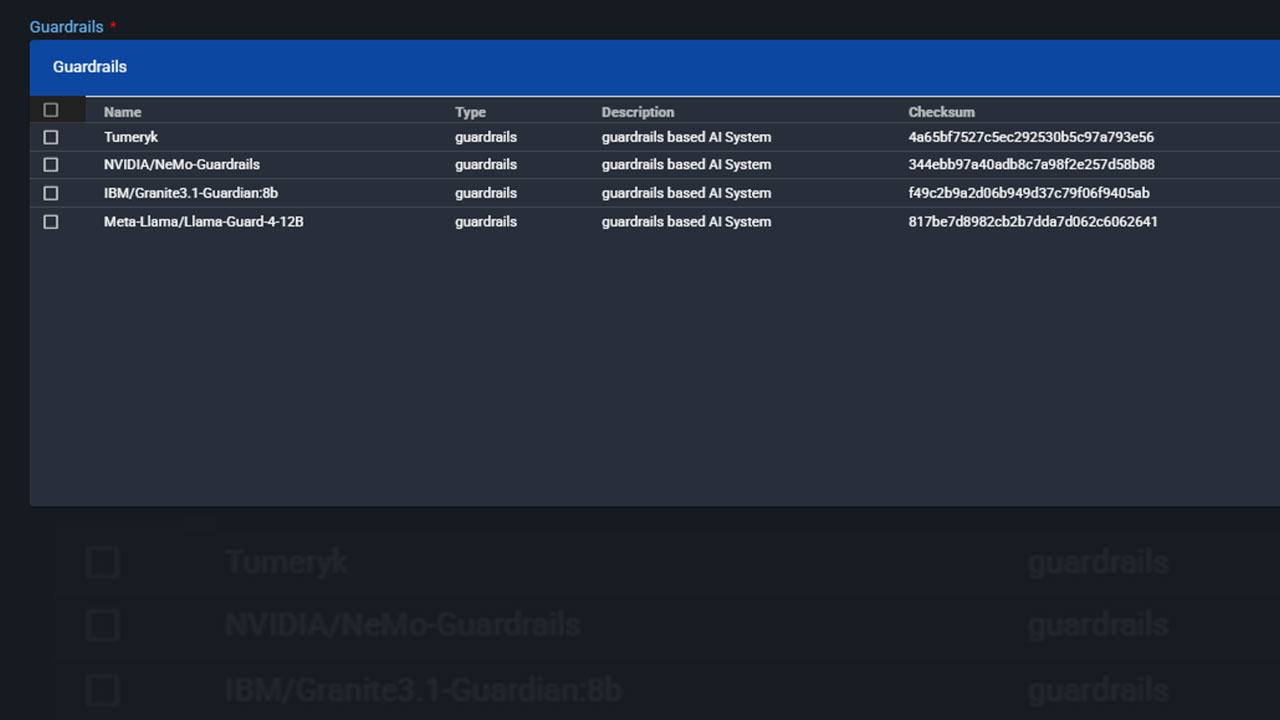

Enforce safety, policy, and intent checks on every run, blocking non-compliant prompts and destructive actions, via seamless integrations with dedicated models and providers.

Per-persona data masking for agentic AI. Sensitive fields are masked before prompts reach the LLM and can optionally be unmasked client-side when results are rendered, with end-to-end auditability.
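To make the mask-then-unmask flow concrete, here is a minimal Python sketch. It is not Fabrix.ai's implementation: the `MASKING_POLICY` table, the persona names, the `<MASK_n>` token format, and the `MaskingSession` class are all hypothetical, and the LLM call is replaced by a plain string. The token-to-value vault stays on the client, which is what makes local unmasking possible.

```python
from dataclasses import dataclass, field

# Hypothetical policy: which record fields each persona must not expose to the LLM.
MASKING_POLICY: dict[str, set[str]] = {
    "support_agent": {"email", "phone"},
    "network_ops": {"customer_name"},
}


@dataclass
class MaskingSession:
    persona: str
    vault: dict[str, str] = field(default_factory=dict)  # token -> original value, kept client-side
    audit_log: list[str] = field(default_factory=list)  # one entry per masked field

    def mask(self, record: dict[str, str]) -> dict[str, str]:
        """Replace the persona's sensitive fields with opaque tokens before prompting."""
        # Unknown personas raise KeyError (fail closed) instead of sending unmasked data.
        sensitive = MASKING_POLICY[self.persona]
        masked: dict[str, str] = {}
        for key, value in record.items():
            if key in sensitive:
                token = f"<MASK_{len(self.vault) + 1}>"
                self.vault[token] = value
                self.audit_log.append(f"{self.persona}: masked field '{key}' as {token}")
                masked[key] = token
            else:
                masked[key] = value
        return masked

    def unmask(self, text: str) -> str:
        """Restore original values in the LLM's response for display on the client."""
        for token, value in self.vault.items():
            text = text.replace(token, value)
        return text


if __name__ == "__main__":
    session = MaskingSession(persona="support_agent")
    prompt_record = session.mask({"ticket": "T-42", "email": "ana@example.com"})
    llm_reply = f"Reply sent to {prompt_record['email']}."  # stand-in for a real LLM call
    print(session.unmask(llm_reply))  # Reply sent to ana@example.com.
```

Because the LLM only ever sees `<MASK_n>` placeholders, the audit log records exactly which fields were withheld for each persona.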

Give LLMs access to your data and tools using the Model Context Protocol (MCP). Built-in MCP server. Add new MCP tools dynamically, with no code.

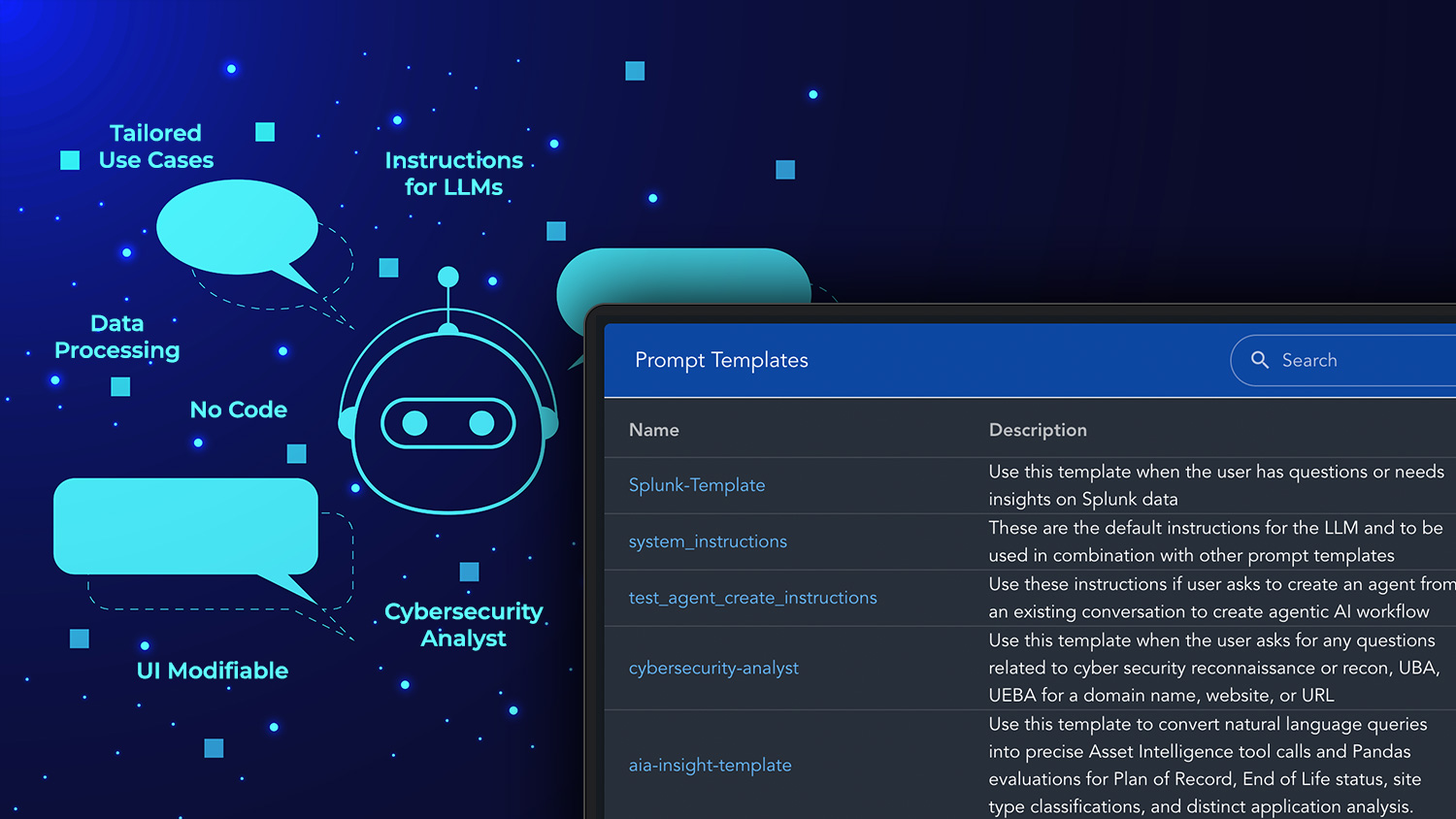

A set of instructions, tailored to your use case, that tells LLMs how to process data and results. Modifiable from the UI. No code.

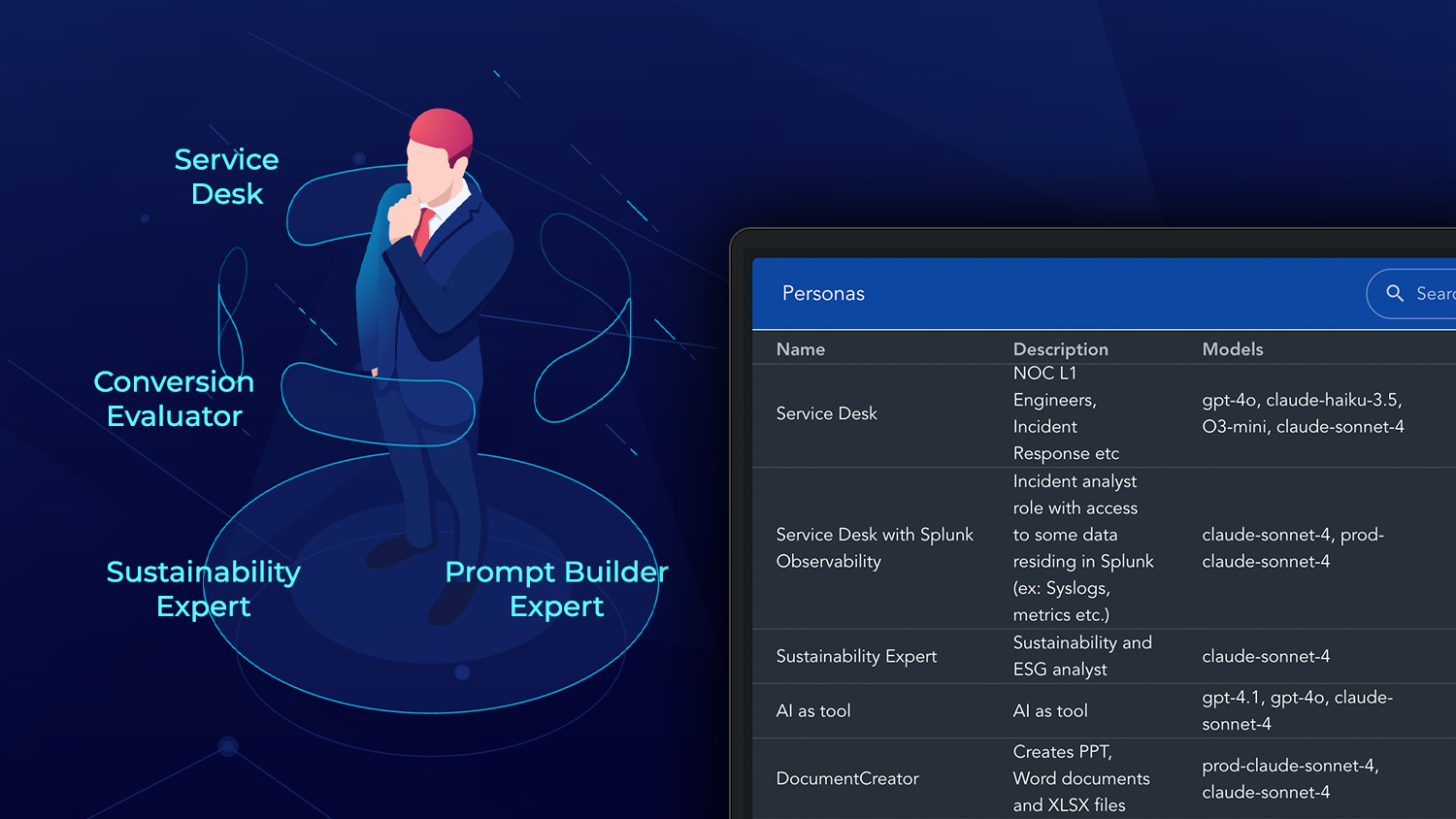

RBAC-like scoping presents only persona-relevant MCP tools and data to the LLM, improving accuracy and governance.
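A minimal sketch of persona-scoped tool exposure, assuming each tool definition carries a hypothetical `allowed_personas` set. The tool names, the `Tool` class, and the `tools_for_persona` helper are illustrative only; they are not part of any real MCP SDK or of Fabrix.ai's API. Only the filtered list would be advertised to the LLM, so tools outside a persona's scope never enter its context.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tool:
    name: str
    description: str
    allowed_personas: frozenset[str]  # hypothetical scoping attribute


# Hypothetical tool catalog; names are illustrative, not real platform tools.
CATALOG: list[Tool] = [
    Tool("get_device_health", "Read device health metrics", frozenset({"network_ops", "sre"})),
    Tool("restart_service", "Restart a service", frozenset({"sre"})),
    Tool("lookup_ticket", "Fetch a support ticket", frozenset({"support_agent", "sre"})),
]


def tools_for_persona(persona: str, catalog: list[Tool] = CATALOG) -> list[dict[str, str]]:
    """Return only the tool definitions this persona may see, in catalog order."""
    return [
        {"name": tool.name, "description": tool.description}
        for tool in catalog
        if persona in tool.allowed_personas
    ]


if __name__ == "__main__":
    for tool in tools_for_persona("network_ops"):
        print(tool["name"])  # get_device_health
```

Filtering on an explicit allow-set makes the default deny: a persona not listed on any tool sees an empty tool list.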

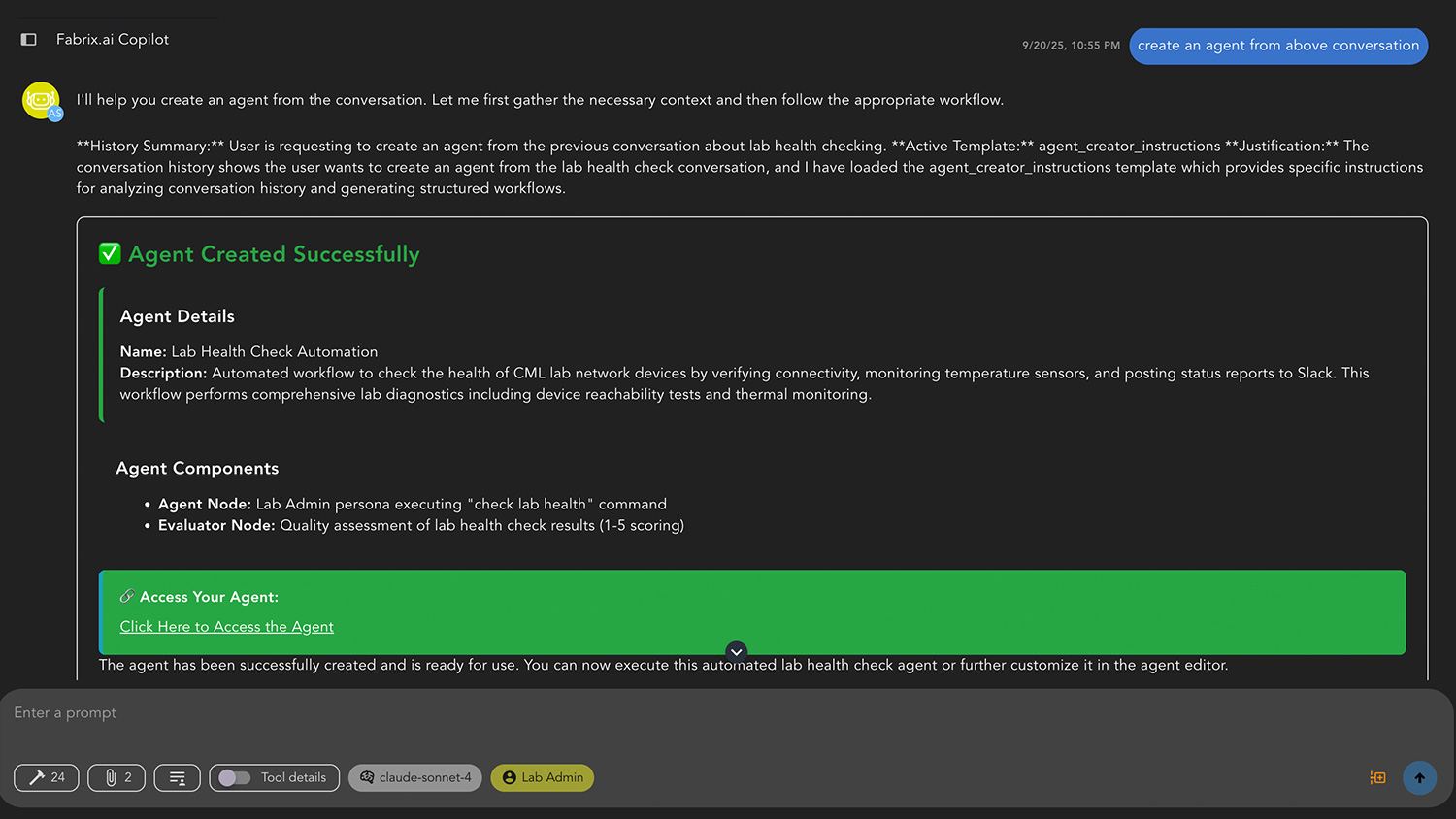

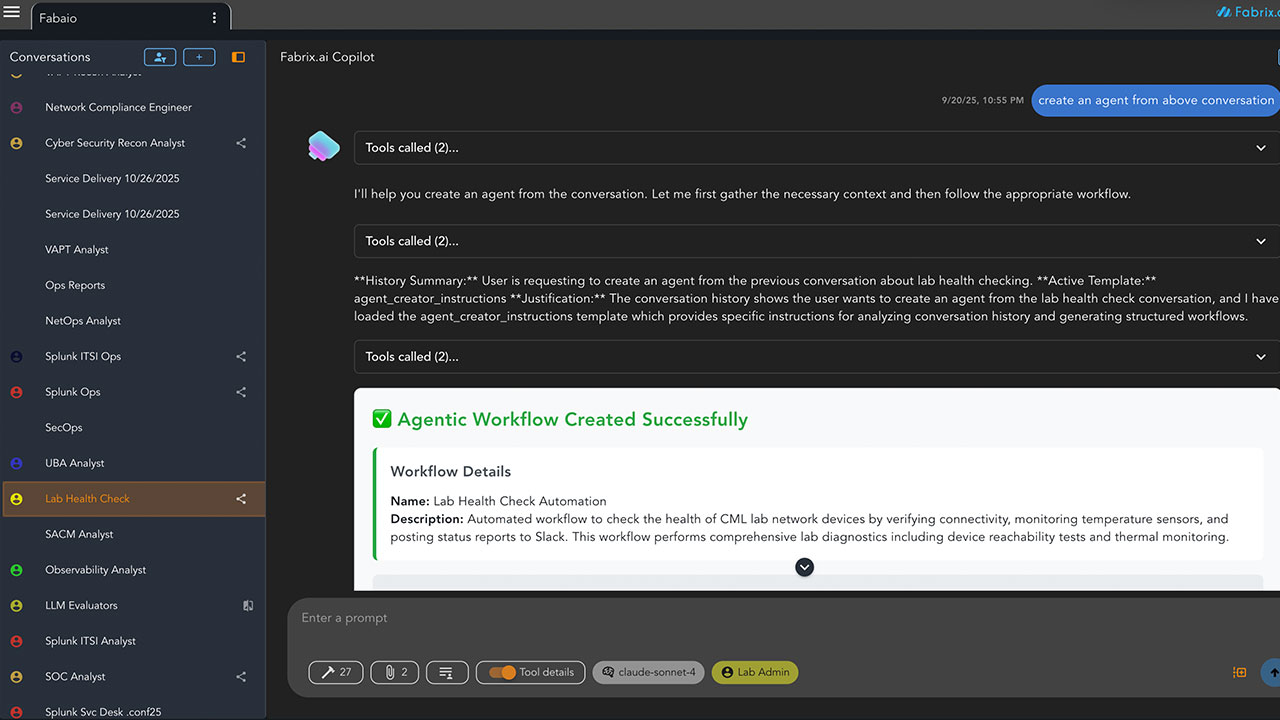

From prompt to production agent: prototype in Copilot, iterate, then simply ask Copilot to create the agent, with its persona, tools, prompts, and workflow packaged automatically.

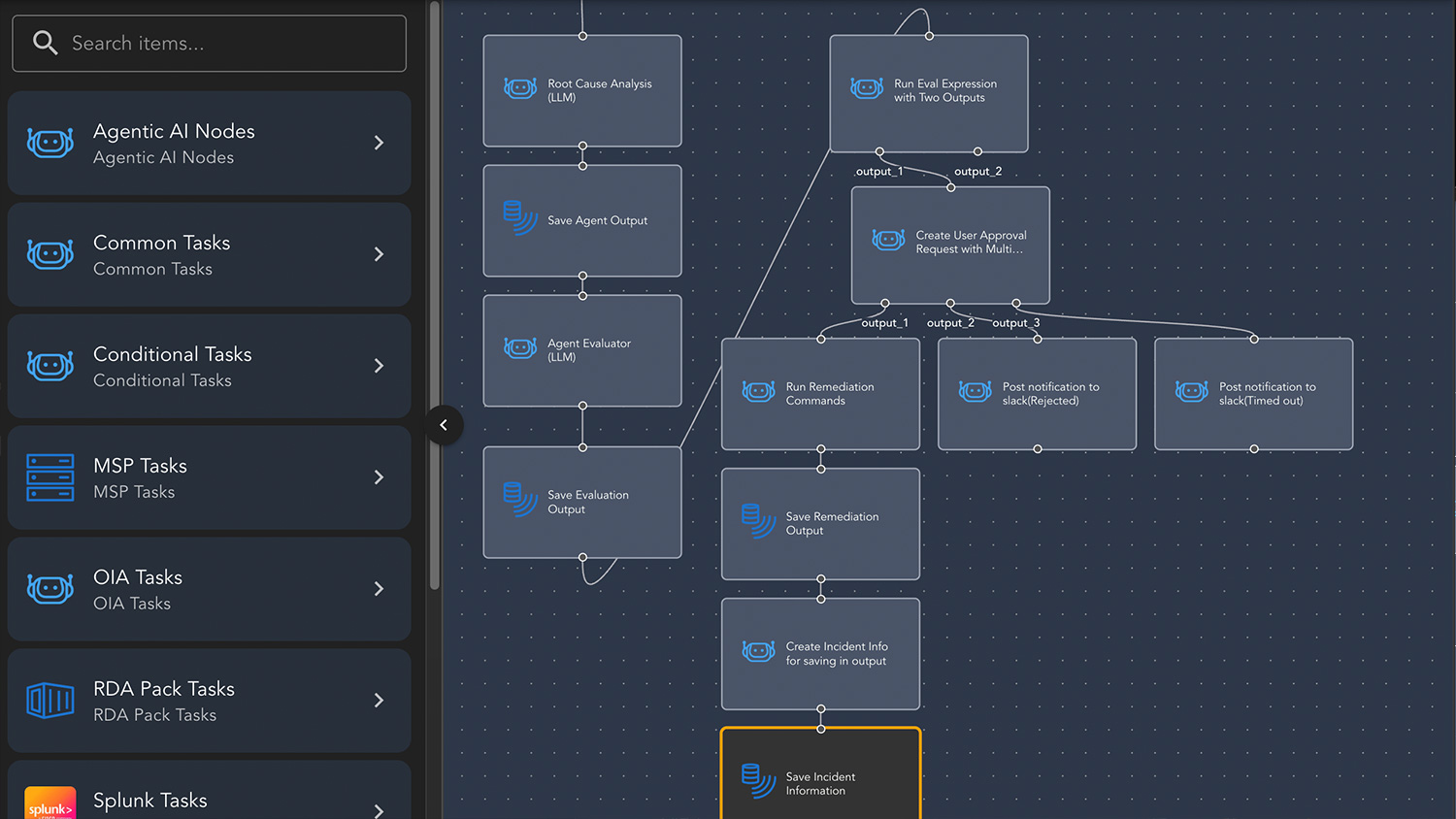

A no-code, drag-and-drop approach to building and operating agentic workflows. Built-in task library.

AgentOps: Operationalizing the Agentic Platform

Build • Operate • Observe — only on Fabrix.ai

Build Agents

Effortlessly create your own agent from a prompt or start from a template. Customize and iterate, or activate it right away.

- Prompt-to-Agent

- Start with templates

- Multi-agent interactions

- Agent integration with RAG

Context & Cache Management

Acts as a high-speed, intelligent cache that supplies your AI agents with real-time context drawn from massive datasets, overcoming context-window limitations.

- Increase Accuracy - Anchors agent responses to eliminate hallucinations and improve reliability.

- Cost Reduction - Reduces token consumption and latency by curating data before it reaches the LLM.
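One way to picture curating data before it reaches the LLM is a relevance filter bounded by a token budget, memoized so that repeated queries reuse the curated context instead of rescanning the dataset. This is a sketch under stated assumptions, not the platform's cache: keyword overlap stands in for real retrieval scoring, one whitespace-separated word is treated as one token, and `RECORDS`, `estimate_tokens`, and `curate_context` are hypothetical names.

```python
from functools import lru_cache

# Hypothetical dataset; in practice this could be millions of telemetry rows.
RECORDS: tuple[str, ...] = (
    "router-7 cpu 95% since 10:02",
    "router-7 interface ge-0/0/1 flapping",
    "db-2 disk 40% used",
    "router-9 cpu 20%",
)


def estimate_tokens(text: str) -> int:
    """Rough token estimate (assumption): one token per whitespace-separated word."""
    return len(text.split())


@lru_cache(maxsize=256)
def curate_context(query: str, token_budget: int) -> tuple[str, ...]:
    """Keep the records most relevant to the query that fit in the token budget."""
    terms = set(query.lower().split())
    scored = [(len(terms & set(r.lower().split())), r) for r in RECORDS]
    # Most relevant first; Python's sort is stable, so ties keep dataset order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    selected: list[str] = []
    used = 0
    for score, record in scored:
        if score == 0:
            break  # remaining records share no terms with the query
        cost = estimate_tokens(record)
        if used + cost > token_budget:
            continue  # skip records that would overflow the budget
        selected.append(record)
        used += cost
    return tuple(selected)  # immutable, so the cached value cannot be mutated by callers
```

With a budget of 8, `curate_context("router-7 cpu", 8)` keeps the CPU alert and the `router-9` line but drops the interface record, because adding it would exceed the budget.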

Universal MCP Server

Bridge the gap between AI agents and legacy infrastructure without manual refactoring or the need for connectors. Our platform auto-generates Model Context Protocol (MCP) tools, turning your existing APIs and databases into agent-ready assets in minutes.

- Interoperability across different AI models and tools

- Zero-Code Tool Generation

Security & Governance

Protect your sensitive enterprise data and enforce strict compliance by providing granular control over what agents can see and do, preventing unauthorized actions.

- Guardrails & PII Protection: Real-time masking of sensitive data

- Role-Based Access Control: Define precise permissions for users and agents

- Comprehensive Audit Trails: Complete log of every agent interaction for compliance and forensic purposes.

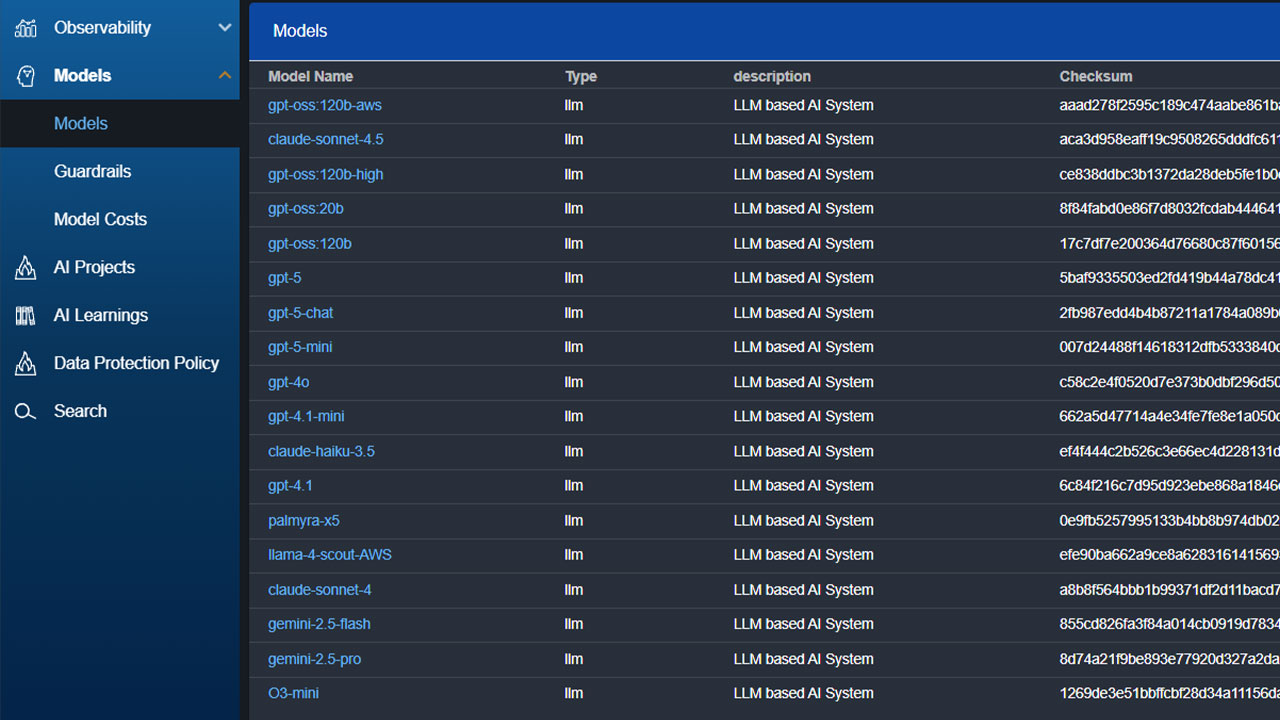

Multi-LLM Flexibility

Gain complete control over your AI architecture by choosing the exact model that fits your security, cost, and performance requirements for every specific task.

- Universal Model Support - Seamlessly integrate any AI model, including open-source options and efficient Small Language Models (SLMs).

- Bring Your Own Model (BYOM) - Deploy your proprietary or fine-tuned internal models to keep sensitive data under your control.

- Cost & Performance Routing - Automatically route each prompt to the optimal model based on complexity, latency requirements, and budget constraints.
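A rough sketch of cost and performance routing: choose the cheapest model that is capable enough for the prompt, meets the latency bound, and fits the budget. The model names, prices, latencies, the 1-to-3 capability scale, and the word-count complexity heuristic are all placeholder assumptions, not Fabrix.ai's routing logic or real provider figures.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelProfile:
    name: str
    capability: int  # hypothetical scale: 1 = small/SLM, 3 = frontier
    usd_per_1k_tokens: float
    p95_latency_ms: int


# Illustrative catalog; names and numbers are placeholders, not measured figures.
MODELS: list[ModelProfile] = [
    ModelProfile("slm-local", 1, 0.0002, 150),
    ModelProfile("mid-hosted", 2, 0.002, 600),
    ModelProfile("frontier-hosted", 3, 0.02, 2500),
]


def estimate_complexity(prompt: str) -> int:
    """Crude heuristic: longer or multi-step prompts need a more capable model."""
    words = len(prompt.split())
    if words > 200 or "step by step" in prompt.lower():
        return 3
    if words > 40:
        return 2
    return 1


def route(prompt: str, max_latency_ms: int, budget_usd: float, expected_tokens: int = 1000) -> str:
    """Pick the cheapest model that is capable enough, fast enough, and within budget."""
    needed = estimate_complexity(prompt)
    candidates = [
        m
        for m in MODELS
        if m.capability >= needed
        and m.p95_latency_ms <= max_latency_ms
        and m.usd_per_1k_tokens * expected_tokens / 1000 <= budget_usd
    ]
    if not candidates:
        raise ValueError("no model satisfies the capability, latency, and budget constraints")
    return min(candidates, key=lambda m: m.usd_per_1k_tokens).name
```

Filtering on hard constraints first and then ranking by price is the simplest policy; a production router might instead weigh latency and quality scores together.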

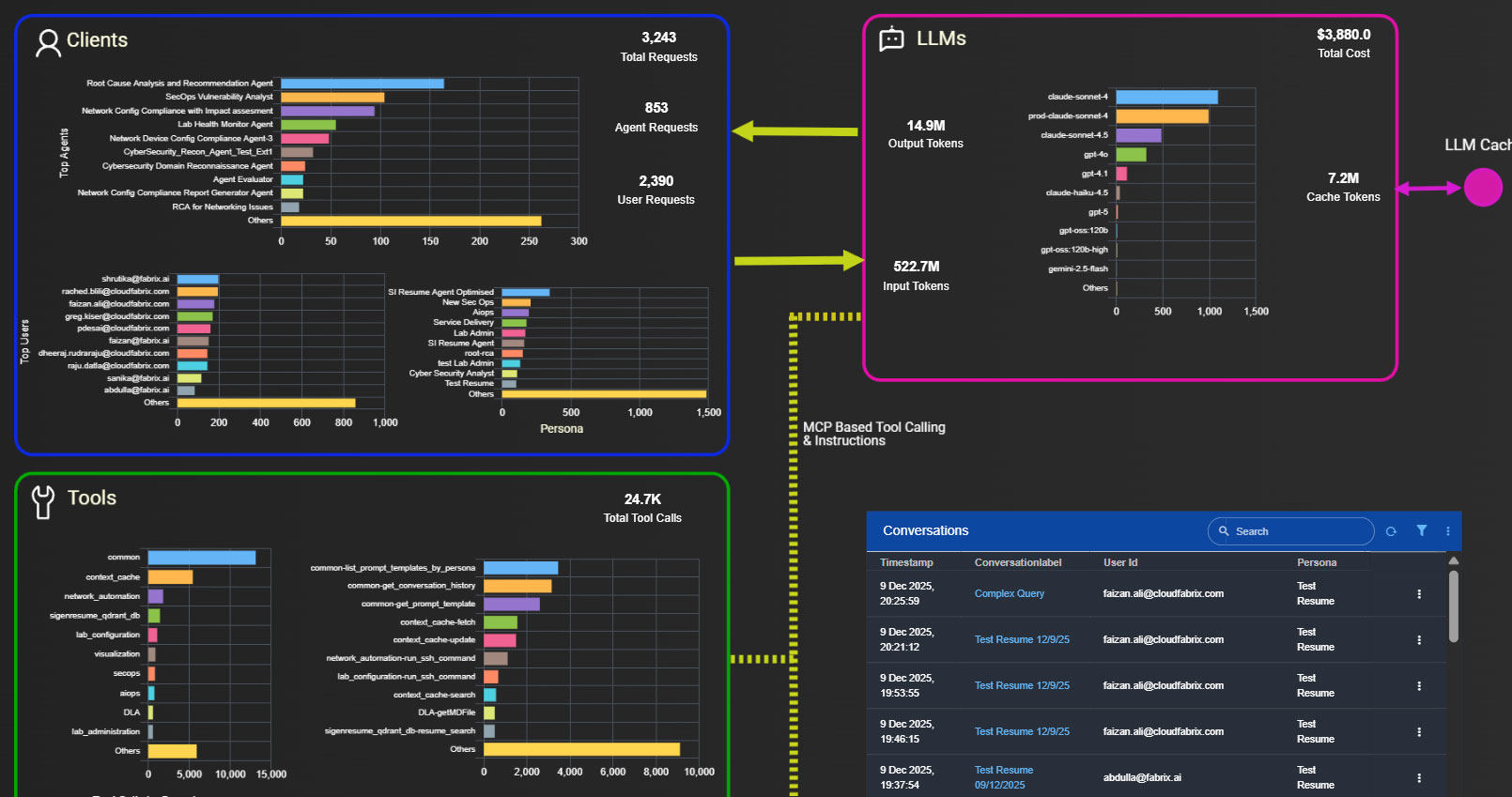

AI Observability

Get a unified view of your entire AI estate with a dashboard that tracks usage, spend, and outcomes across every team and application.

- Visualize spend and token usage across all providers, broken down by department or project.